AI image processing systems need massive computational resources and energy for complex image translations. A single high-resolution image transformation can demand several minutes and substantial GPU power. This makes real-time processing a major challenge.

Quandela has proposed two groundbreaking solutions that combine AI image processing with photonic quantum computing technology. Our approaches show impressive capabilities in day-to-night scene translation. They either simplify the training or achieve better efficiency than conventional methods. In this piece, we’ll get into how these quantum-enhanced systems work and compare their performance to traditional approaches.

Understanding Quantum-Enhanced Image Translation

To go back to the basics, Generative Adversarial Networks (GANs) are a class of generative learning frameworks in which two neural networks compete: the generator produces synthetic data while the discriminator evaluates its authenticity. The generator’s goal is to fool the discriminator, while the discriminator tries to identify the fake images produced by the generator. This back-and-forth produces increasingly realistic image translations. For domain translation, a framework called cycleGAN is used. It trains two GANs, one for each direction of translation (e.g. night to day and day to night), while encouraging them to be approximate inverses of one another (e.g. going from night to day and back to night should not change the original image).
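The "approximately inverse" requirement is usually enforced with a cycle-consistency term in the loss. The snippet below is a minimal sketch of that term only, not the training code used in this work: the two "generators" are hypothetical toy functions standing in for trained networks, and the L1 distance is an assumption borrowed from the standard cycleGAN formulation.

```python
import numpy as np

def cycle_consistency_loss(x, forward, backward):
    """Mean absolute error between x and backward(forward(x))."""
    return float(np.mean(np.abs(backward(forward(x)) - x)))

# Toy stand-ins for the two generators (hypothetical, not trained networks).
def night_to_day(img):
    return img * 2.0 + 0.1          # "brighten" the image

def day_to_night_exact(img):
    return (img - 0.1) / 2.0        # exact inverse of night_to_day

def day_to_night_biased(img):
    return img / 2.0                # imperfect inverse: forgets the offset

rng = np.random.default_rng(0)
night = rng.random((4, 8, 8, 3))    # batch of 4 small RGB images in [0, 1)

# Close to zero when the two maps are inverses of each other.
loss_exact = cycle_consistency_loss(night, night_to_day, day_to_night_exact)
# Strictly positive when the round trip changes the image.
loss_biased = cycle_consistency_loss(night, night_to_day, day_to_night_biased)
```

In a full cycleGAN this term is added to the two adversarial losses, so each generator is rewarded both for fooling its discriminator and for being undone by the other generator.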

To go beyond this approach, we propose two solutions. One relies on a quantum GAN while the other is a quantum-assisted cycleGAN.

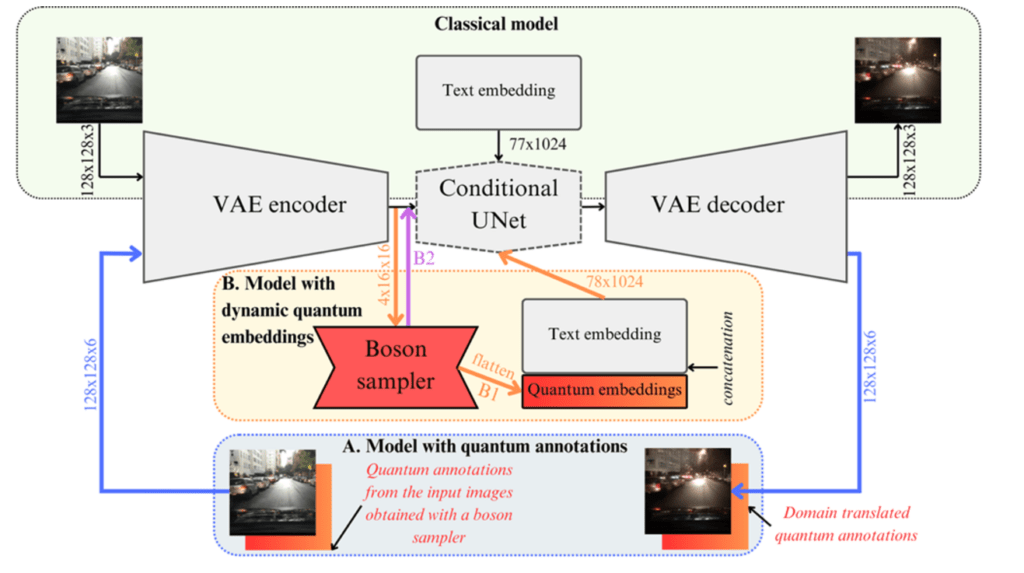

Our quantum GAN operates in the latent space, a compressed representation that holds the key features of an image. We use a pretrained StableDiffusion VAE, which ensures accurate reconstruction. In this picture, the classical generator gives way to a quantum circuit known as a boson sampler.

This quantum boost brings clear benefits:

· A simpler design that needs just one generator, unlike classical methods that require multiple networks

· Fewer trainable parameters than standard neural networks

· A natural inverse mapping, provided by the properties of quantum mechanics
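The inverse-mapping point rests on a basic fact: a lossless linear optical circuit acts on the optical modes as a unitary matrix, and the inverse of a unitary is simply its conjugate transpose. The sketch below illustrates that fact on mode amplitudes with a randomly drawn unitary. The matrix, its size and the helper name `random_unitary` are illustrative assumptions; how the inverse is used inside the image translation pipeline is not shown here.

```python
import numpy as np

def random_unitary(n_modes, seed=0):
    """Draw a random unitary matrix via QR decomposition (illustrative only)."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(n_modes, n_modes)) + 1j * rng.normal(size=(n_modes, n_modes))
    q, r = np.linalg.qr(z)
    d = np.diag(r)
    return q * (d / np.abs(d))      # rescale columns by unit-modulus phases

U = random_unitary(6)               # stands in for a trained 6-mode circuit
U_inv = U.conj().T                  # the inverse needs no extra training

# A normalised vector of mode amplitudes sent through the circuit and back.
rng = np.random.default_rng(1)
amplitudes = rng.normal(size=6) + 1j * rng.normal(size=6)
amplitudes /= np.linalg.norm(amplitudes)
recovered = U_inv @ (U @ amplitudes)

round_trip_error = float(np.max(np.abs(recovered - amplitudes)))
```

A generic classical network has no such built-in inverse, which is why a cycleGAN has to learn the reverse direction with a second generator.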

Our second solution, the quantum-assisted cycleGAN, is a hybrid quantum-classical system that keeps the classical model as its base and adds a layer of quantum processing on top to deliver better results. The quantum circuit handles extra information during learning and produces better image translations while following similar training patterns. We observe that the generated images show fewer artefacts. This is a great result, since hallucination is a major problem for state-of-the-art classical generative models.

In both proposed solutions, the modular design makes future upgrades simple as quantum hardware improves. We can adjust the latent space dimension by modifying the VAE, and add new quantum components as classical architectures grow. This ensures our AI image processing will scale with future developments.

Technical Architecture and Implementation

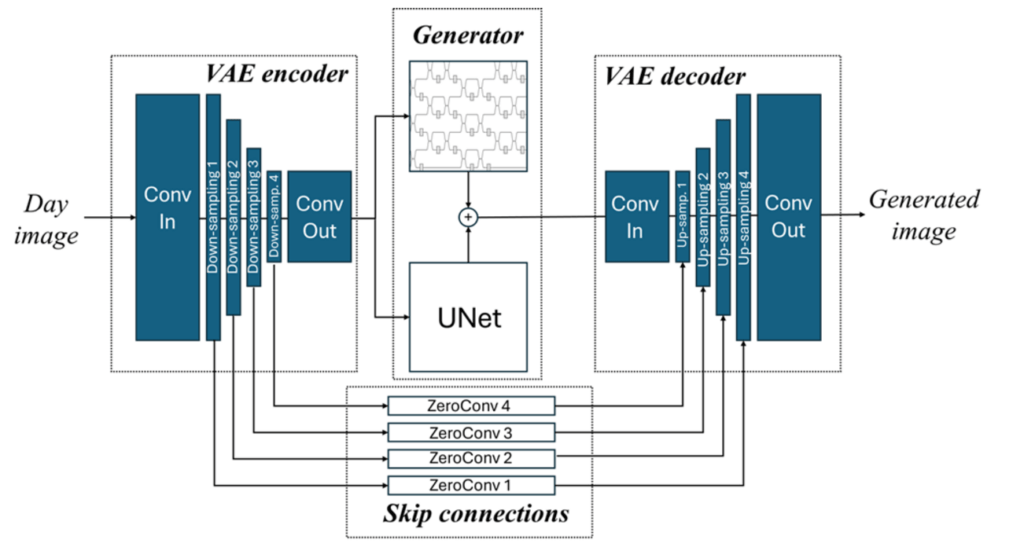

1. For the quantum GAN solution, our system uses a sophisticated architecture that places the quantum generator within the latent space. This design allows quick processing of images. We built a hybrid system that brings together the strengths of quantum and classical computing.

The core architecture has these essential components:

· A pretrained StableDiffusion VAE to reconstruct images accurately;

· A linear optical quantum circuit (boson sampler) that works as the quantum generator;

· Skip-connections linking down-sampling and up-sampling layers;

· A classical UNet integration to preserve details better.

We replaced the standard classical generator with a photonic processor that works directly in the latent space. This change cuts down computational complexity while keeping the output quality. The quantum circuit works with the classical UNet to create better results, and this helps us keep the fine details in transformed images.
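To make the idea of a boson sampler used as a generator more concrete, here is a small classical simulation of an ideal, lossless linear optical circuit. It relies on the textbook result that the probability of an output photon pattern is given by the squared modulus of a matrix permanent. This is a brute-force sketch for a handful of photons, not the photonic processor itself nor any particular library API. The function names are hypothetical, and the step that turns photon-counting statistics into latent vectors is deliberately left out.

```python
import itertools
import math
import numpy as np

def permanent(a):
    """Brute-force permanent of a square matrix (fine for a few photons)."""
    n = a.shape[0]
    total = 0j
    for perm in itertools.permutations(range(n)):
        prod = 1 + 0j
        for row, col in enumerate(perm):
            prod *= a[row, col]
        total += prod
    return total

def output_probability(u, input_occ, output_occ):
    """P(output | input) = |Perm(U_sub)|^2 / (prod_i s_i! * prod_j t_j!)."""
    in_modes = [m for m, k in enumerate(input_occ) for _ in range(k)]
    out_modes = [m for m, k in enumerate(output_occ) for _ in range(k)]
    sub = u[np.ix_(out_modes, in_modes)]   # rows: outputs, columns: inputs
    norm = math.prod(math.factorial(k) for k in input_occ)
    norm *= math.prod(math.factorial(k) for k in output_occ)
    return abs(permanent(sub)) ** 2 / norm

def output_distribution(u, input_occ):
    """Probabilities of all output patterns with the same photon number."""
    n_modes, n_photons = u.shape[0], sum(input_occ)
    dist = {}
    for occ in itertools.product(range(n_photons + 1), repeat=n_modes):
        if sum(occ) == n_photons:
            dist[occ] = output_probability(u, input_occ, occ)
    return dist

# Sanity check: two photons on a 50:50 beamsplitter never exit separately
# (Hong-Ou-Mandel effect); they bunch in one of the two output modes.
beamsplitter = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
hom = output_distribution(beamsplitter, (1, 1))
```

In a generator setting, the entries of the unitary depend on tunable phases, and training adjusts those phases so that the sampled distribution yields useful latent codes. The brute-force permanent scales factorially with the photon number, which is part of what makes the physical device interesting.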

Our implementation stands out because of its parameter efficiency. The quantum generator needs nowhere near as many trainable parameters as similar neural networks, which saves resources. On top of that, our modular architecture merges well with evolving quantum hardware – we can adjust the latent space dimension by tweaking the VAE or add new quantum components as they become accessible.
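One way to get a feel for this parameter efficiency is a back-of-the-envelope count. The sketch below assumes a universal interferometer mesh with m(m-1)/2 beamsplitters and two tunable phases each, and compares it with a single dense layer acting on a vector as large as the space of photon output patterns. The mode and photon numbers are illustrative assumptions, not the configuration used in our experiments, and the dense layer is only one possible classical baseline.

```python
import math

def interferometer_parameter_count(n_modes):
    """Tunable phases in a universal mesh: m(m-1)/2 beamsplitters, 2 phases each.

    The m extra output phases are ignored because they do not affect
    photon-counting statistics.
    """
    return n_modes * (n_modes - 1)

def fock_space_dimension(n_photons, n_modes):
    """Number of ways to distribute n identical photons over m modes."""
    return math.comb(n_photons + n_modes - 1, n_photons)

def dense_layer_parameter_count(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

# Illustrative sizes (assumed): 4 photons in 8 modes.
n_photons, n_modes = 4, 8
quantum_params = interferometer_parameter_count(n_modes)
output_dim = fock_space_dimension(n_photons, n_modes)
classical_params = dense_layer_parameter_count(output_dim, output_dim)
```

The point of the comparison is the scaling: the circuit's parameter count grows quadratically with the number of modes, while the space of output patterns it samples from grows combinatorially.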

The beauty of this implementation is its straightforward approach. Classical methods need multiple networks with different goals, but our system works with just one generator. This simplified architecture combines with quantum mechanics’ natural properties to provide inverse mapping capabilities, which makes the whole process reliable and quick.

2. For the quantum-assisted cycleGAN, we reuse the classical framework but supplement the generator with additional information processed by our linear optical quantum device. While retaining the computational complexity of the original approach, we observe an improvement in the quality of the generated images.
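As a rough illustration of what "supplementing the generator" could look like, the sketch below tiles a per-image feature vector over the spatial grid and appends it as extra channels to a generator feature map. This is one plausible wiring under stated assumptions, not a description of the actual architecture: the function name, the tensor layout and the random placeholder features standing in for the quantum device output are all hypothetical.

```python
import numpy as np

def augment_with_quantum_features(feature_map, quantum_features):
    """Append per-image features as extra channels.

    feature_map:      array of shape (batch, height, width, channels)
    quantum_features: array of shape (batch, n_features)
    """
    batch, height, width, _ = feature_map.shape
    n_features = quantum_features.shape[1]
    # Repeat each feature vector at every spatial position.
    tiled = np.broadcast_to(
        quantum_features[:, None, None, :], (batch, height, width, n_features)
    )
    return np.concatenate([feature_map, tiled], axis=-1)

rng = np.random.default_rng(0)
features = rng.random((2, 4, 4, 8))     # placeholder generator activations
q_features = rng.random((2, 5))         # placeholder for device output
q_features /= q_features.sum(axis=1, keepdims=True)  # e.g. normalised frequencies

augmented = augment_with_quantum_features(features, q_features)
```

The rest of the classical generator is unchanged in this picture, apart from the next layer accepting a few more input channels, which is consistent with keeping the overall computational cost close to the original.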

Performance Analysis and Benchmarks

Our latest analysis shows remarkable advantages in quantum-enhanced AI image processing. As highlighted above, our quantum generator needs far fewer parameters and has a much simpler structure than traditional neural networks and still delivers good output quality.

· Simplified Training Process: The unitarity of quantum mechanics helps us achieve inverse mapping without extra training

· Resource Efficiency: The quantum generator keeps parameter counts low even as the circuit grows. The quantum-assisted solution retains the computational complexity of the classical approach but produces higher-quality images.

· Better Image Quality: The hybrid approach preserves more details in translated images

Our quantum GAN delivers promising results, but we want to be candid about its difficulties. Its training process brings its own complexity, though of a different kind than in classical methods. Traditional cycleGANs need two separate generators tied together by consistency relations, which makes them resource-heavy and slow. Our quantum approach simplifies the architecture but adds new training challenges.

In contrast, the hybrid quantum-classical version matches the loss patterns of classical methods but delivers cleaner results. We intend to keep exploring further performance gains, especially as our quantum circuits grow and improve. We designed both solutions with scalability in mind. Despite some training challenges, the system’s modular design allows upgrades as quantum hardware capabilities grow, and this is a route we must keep exploring.

Conclusion

Quandela’s quantum-enhanced approach marks a solid step toward AI image processing supplemented with quantum capabilities. Our research shows how quantum computing can be integrated with traditional image processing methods to either reduce computational overhead or deliver better quality outputs.

We highlight two main takeaways:

· Quantum generators integrate seamlessly within the latent space and simplify complex image translations while requiring far fewer tunable parameters.

· The quantum-assisted cycleGAN improves output quality, with fewer artifacts and better detail preservation.

This innovation opens new doors for quantum computing in AI image processing. The boost in efficiency and quality sets the stage for exciting applications in computer vision, medical imaging, and autonomous systems.